Last October Libby Reinish from the Free Software Foundation commissioned Urchin to make a short film in celebration of the FSF’s 30th anniversary, to support their annual funding campaign. We were thrilled at the chance to work on messaging we care about, that’s so close to our own mission. The short deadline and limited budget made for some interesting challenges. And of course we would produce this film (like everything else we do) with Free Software, especially Blender. You can use the embed code from the FSF, or Urchin’s as follows:

<iframe width="640" height="390" src="http://video.urchn.org/usrlib/" frameborder="0" allowfullscreen></iframe>

Script and Design

We started pre-production with only a target audience matrix related to technical knowledge and potential philosophical alignment. The FSF gave us a free hand to propose a script, so before starting to write, we took some time to consider and Fateh did a lot of interesting research. There were many more points we’d like to have included, but we had to be brutal in deciding what could be in the scope of this small project. We came away inspired to find ways of continuing the much needed work of free software messaging. (And actually, Fateh already has a very cool plan in development.)

The theme: User Lib!

One of the most important tenets of the FSF stood out: that this is predominantly a movement about people’s rights; it’s not just a programming methodology that favors openness and collaboration (though this is an awesome side-effect) or about writing better code — it is about making sure that users are in control of their computing, and not the other way around. A play on /usr/lib/ as ‘User Liberation’ that came out of our banter at Libre Planet provided the theme. (Side note: we are aware that /usr does not traditionally mean user, but punning logic prevails.)

Visual Pre-production

Once the bare bones of the script came into focus, I started working on little tests in Krita and Blender, with the mantra to make cartoony motion, and flat shade all the things (no lights). Even light effects are done with materials at differing values and saturations.

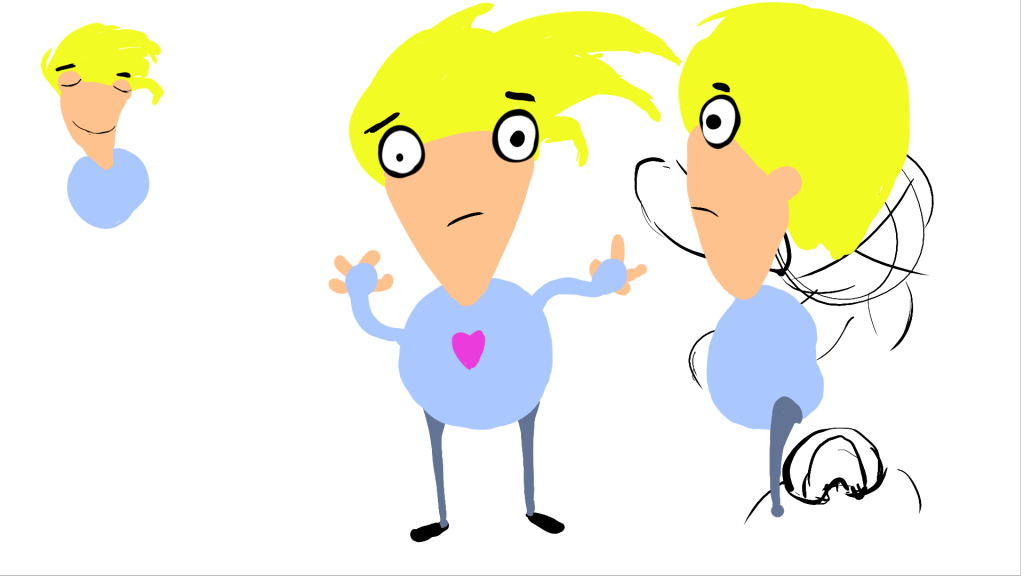

I also started thinking about our main character. It’s tricky to design a character without race or gender when pressed for time, but that was our goal. We named it ‘Mo’ (short for anything from Maureen to Mohammad) and called it “they” (neutral pronouns).

The “Go” decision

Once Fateh got approval from the FSF we switched into production with her great script to work from. (software: Piratepad, Gedit, Textplay and Trelby)

Production

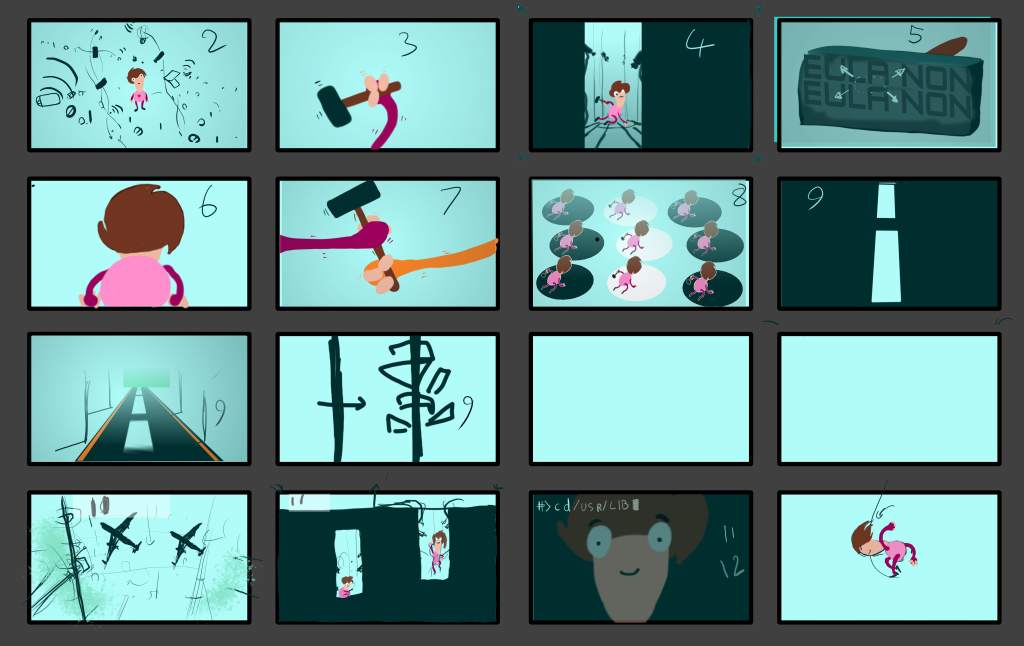

Boards

With very limited production time and a carefully prepared script it didn’t make sense to do elaborate boards and animatic: I’d rely on small thumbnails for the short, one per shot, and a very simple animatic with no animation. I used Krita to draw the boards, then cropped and exported into Blender for the animatic. Not much to say here except that I really like Krita. If it gains Python scripting it’ll be perfect for me. When time permits in future preproductions, I might use the new grease pencil tools to animate on top of Krita layers in Blender.

Scene Organization

With my decision to do cartoony animation came a bit of a problem. Blender typically allows you to link (or for Maya-speakers, reference) characters into scenes. However, this comes at a cost: every deformer and many decisions have to be rigged in the library file. You can’t just slap an ad-hoc lattice onto your character, or make a shapekey specific to a shot, or simply delete or replace a mesh while animating. All those things must be rigged in, and, given my short production time, that just didn’t feel practical. As a result, most shots have Mo local (though the rig is perfectly capable of being linked). The short production and relatively few shots made me feel fairly OK about this (it would be murder on a Wires For Empathy level of complexity though).

Shrinkwrap, Dependency Graph and Workarounds

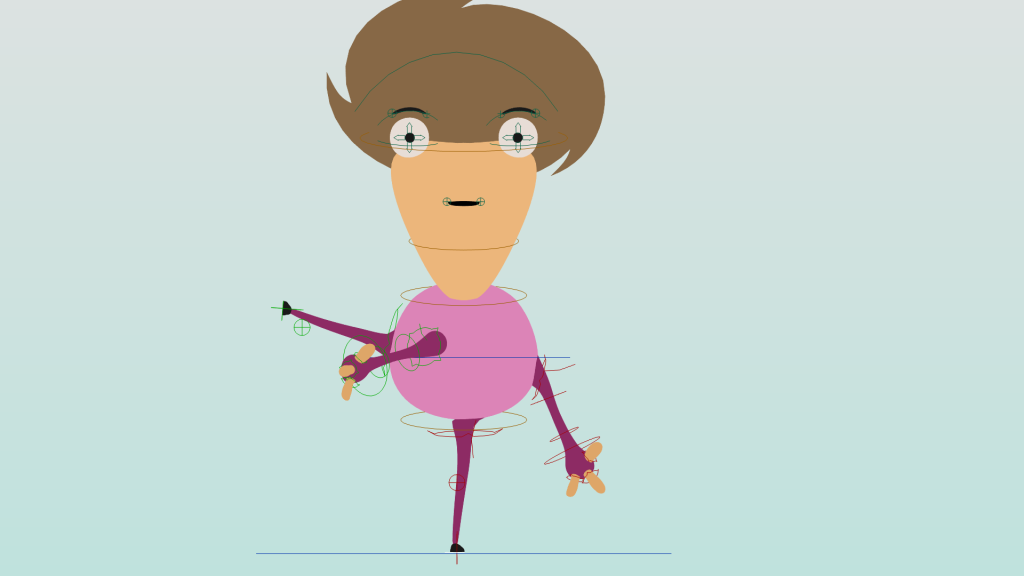

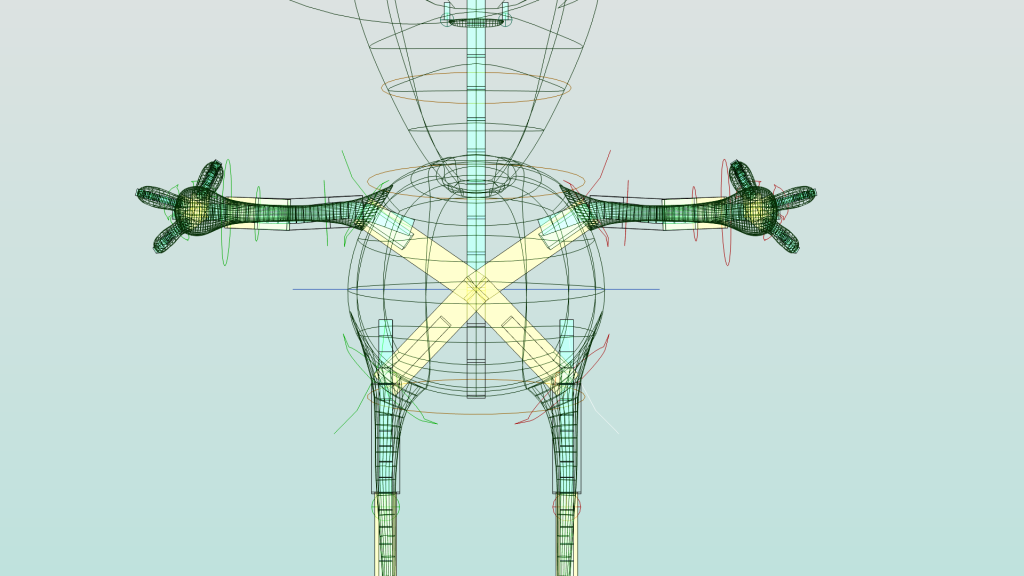

So, Mo is basically a hierarchy of Blobs and Limbs. You get the body-blob, with arms and legs and head sprouting off it, followed by (in the case of the arms) hand blobs, with little sausage fingers growing from them. There’s the minimum number of fingers, and every limb is supposed to move freely on the blob – an arm can be placed anywhere during the animation, so can a leg, and so can the finger on each hand. I compromised with the head and made it the only fixed element on the surface with the traditional chest-neck-head hierarchy.

Initially I wanted to shrinkwrap the arms to the surface, but this got me into Blender dependency graph limitations: you can’t break in and out of the armature dependency graph without creating an object-level cycle (sorry if this is Greek). Luckily, Sergey is fixing this, but for now I used single bone IK chains to constrain the arms and legs to a sphere, and then added a movable bone so the animator could manually keep the arm on the surface. The mesh itself is shrinkwrapped, and the setup is far more forgiving than you’d think. Those cool yellow X-man logos on the body and the hands are actually what allows the limbs to travel around on their blobs…
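The geometric idea behind keeping a limb root on a blob can be sketched outside Blender as snapping a point to the nearest spot on a sphere. This is only an illustration of the concept, not the rig itself (which relies on IK chains and a shrinkwrapped mesh); the function name `snap_to_sphere` and its arguments are hypothetical.

```python
import math

def snap_to_sphere(point, center, radius):
    """Return the point on the sphere surface closest to `point` (3D tuples)."""
    offset = [p - c for p, c in zip(point, center)]
    length = math.sqrt(sum(o * o for o in offset))
    if length == 0.0:
        # Degenerate case: the point sits at the center, so pick an arbitrary direction.
        return (center[0] + radius, center[1], center[2])
    scale = radius / length
    return tuple(c + o * scale for c, o in zip(center, offset))

# A limb control floating above a blob of radius 2 gets pulled back to the surface.
limb_root = snap_to_sphere((0.0, 0.0, 5.0), (0.0, 0.0, 0.0), 2.0)
```

Wherever the control is dragged, the snapped result stays exactly one radius away from the blob's center, which is what lets a limb slide freely over the surface.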

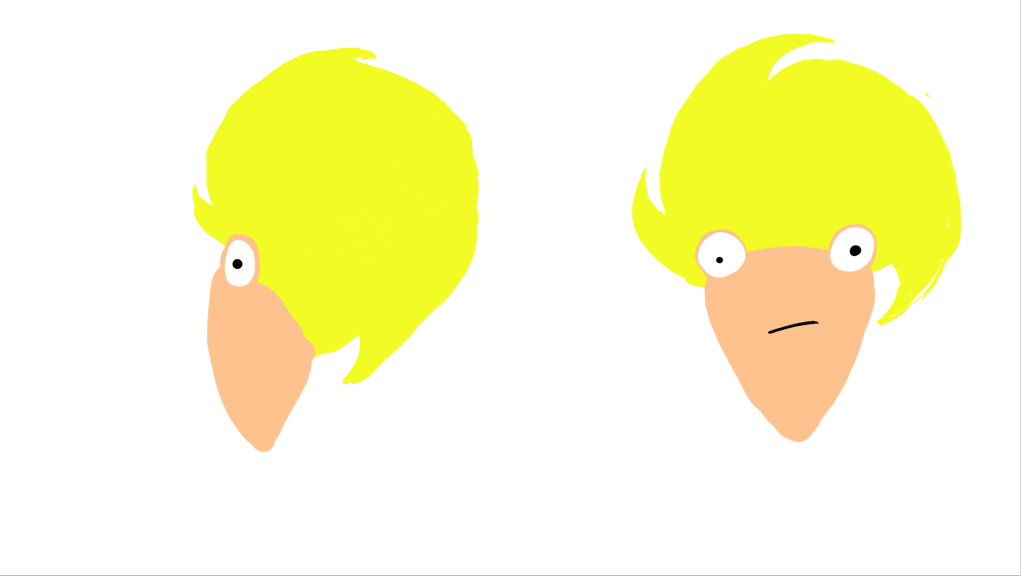

Hair

I wanted Mo’s hair to be a recognizable shape of a sphere/circle with 3 swirls coming out of it. My initial approach was to model this in 3D, but the resulting silhouette was always awkward depending on the camera angle. Attempts to fix this with rigging and shapekeys were time consuming and unsatisfying, so I ended up using the flat shading to my advantage, ditching the 3D hair and splitting the hair into a 3D ‘junction’ with the skin and eyes and a flat shape (with shapekeys for animation) that I could just place relative to the camera to get the desired shape. It worked brilliantly and I only used 3D hair in one shot, where there were too many Mos to adjust manually. In this shot however, Mo’s hair is the same back to front – this Mo had no face.

Broken Rigging

I know what you’re thinking, but that’s not what I’m talking about. As I did my first test of Mo hammering I found that, as I moved the shoulders for the extreme cartoony poses, this would totally break the arcs of the arms and hands (and hammer). I could just compensate by either moving the (hidden) shoulder on an arc, or counter-animating in the arms and hands... but yuck. A simple arc motion gets into lengthy tweakage. Luckily, I’ve been introduced to the concept of broken rigs by the folks at Anzovin Studios, some of the best 2.5D animators and riggers around. The idea is to get away from 3D character hierarchies and FK/IK complexities, and just make every joint a sibling in world space. Animating a character feels more like 2D, freely sculpting its pose, and the lack of inheritance means you can finesse every arc on every joint without having to compensate for what the hierarchy is doing. With little time to create a full broken rig I did the next best thing: I added a broken mode to Mo’s arms in addition to the FK and IK controls. Then I animated the hammer strike again. It took a fraction of the time and was uber smooth. I became an instant convert. As I got into the ‘sometimes hierarchies are nice for posing’ issue, I created a quick script that allowed copying poses between broken and FK controls, so I could pose in FK and then keyframe in broken mode. Mo’s arms are keyframed with broken IK in most shots; there may be only one exception. (Incidentally, the above shot illustrates that outside of camera view, most of my animations look terrible.)
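To make the FK-to-broken idea concrete, here is a minimal 2D sketch in plain Python. It is not the production script: it only shows how a pose defined by parent-relative angles can be converted into independent world-space joint positions, which is what a broken rig keyframes. The function name `fk_world_positions` is hypothetical.

```python
import math

def fk_world_positions(root, bone_lengths, joint_angles):
    """Walk a 2D FK chain and return the world position of every joint.

    joint_angles are in radians and relative to the parent bone,
    as they would be in a normal hierarchy.
    """
    x, y = root
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles):
        heading += angle  # children inherit the parent's rotation
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions

# Pose in FK (shoulder up 90 degrees, elbow bent back 90 degrees)...
broken_pose = fk_world_positions((0.0, 0.0), [1.0, 1.0], [math.pi / 2, -math.pi / 2])

# ...then treat each joint as a sibling: moving the shoulder leaves the hand alone.
broken_pose[0] = (0.3, -0.2)
hand = broken_pose[-1]
```

In the FK version, changing the shoulder would drag the elbow and hand along with it; once the joints are stored as world-space points, each arc can be adjusted on its own.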

Orbiting Particles

This is a story about letting go. I wanted the first shot to start with particle systems orbiting Mo ‘surrounded by software’ like an atom or a solar system. Here the age of Blender’s particle systems started to show. The best way I found to create the orbit was using hair guides, but they have a fatal limitation: the particle can only traverse the guide once in its lifetime. So unless you want super slow orbits (I didn’t) you get particles popping in and out of existence at the end of the guide. I struggled to fix this by coiling the guides so they were more than one loop, but this introduced a new type of popping where particles would flip 180 degrees and that was even more jarring. In the end, I just ‘lived with it’. If you examine the first shot closely, you can see this happen. Also some of the particles go through Mo’s head 😉

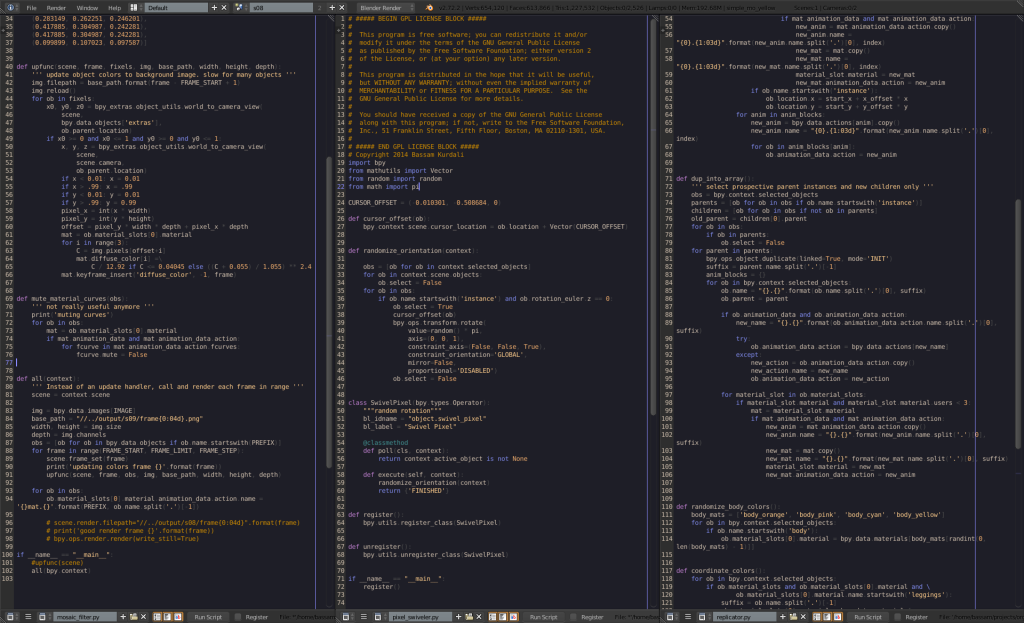

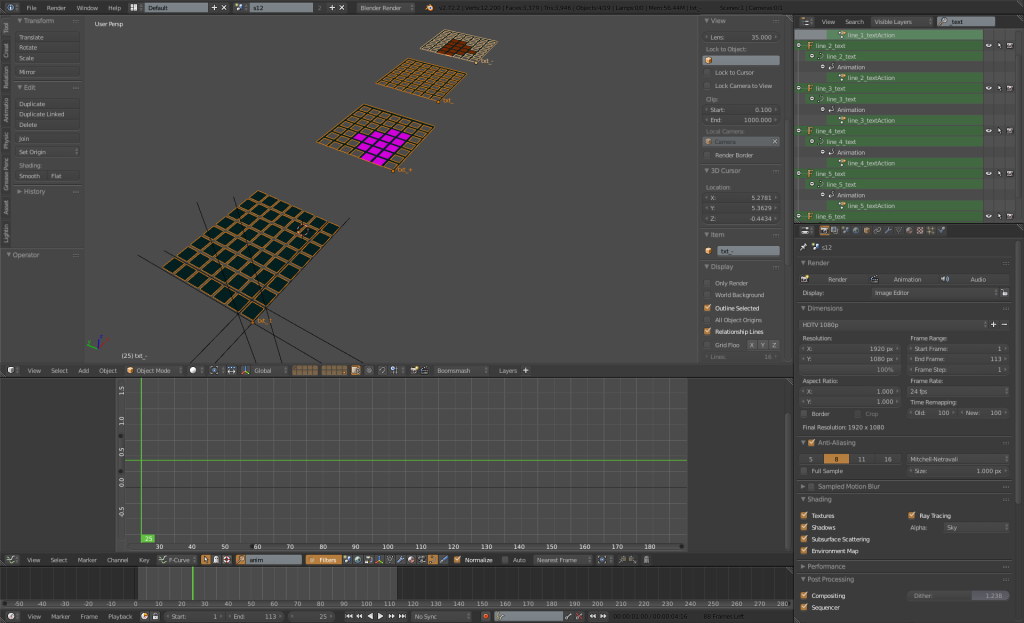

Text Effects

Well, there’s lots of text in this short. And I wanted to have it typing, randomly animating, with a blinking cursor (sometimes) at the end. Luckily, much earlier, I had written a small addon for Jakub Steiner to do a ‘typewriter text’ effect. I much later built it into a bigger system of text effects called… well, TextFX. This allows you to stack different text segments into a single Blender Text Object, and then stack multiple Animations on each Segment (like typing, blinking, changing material or font, etc.).
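The core of a typing effect with a blinking cursor can be sketched as a pure function of the current frame. This is not the TextFX code; the function name, parameters and default timings below are assumptions chosen for illustration.

```python
def typewriter(text, frame, start=1, frames_per_char=2, cursor="_", blink_period=12):
    """Return the string visible at `frame` for a typing effect with a blinking cursor."""
    elapsed = max(0, frame - start)
    visible = text[: min(len(text), elapsed // frames_per_char)]
    # The cursor is shown for the first half of every blink period.
    cursor_on = (frame % blink_period) < blink_period // 2
    return visible + (cursor if cursor_on else "")

# Frame 5: two characters typed, cursor visible.
print(typewriter("free", 5))
```

Inside Blender, a function like this would typically be called from a frame-change handler that writes the result into a text object's `body`; keeping the logic free of Blender calls makes it easy to test on its own.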

I could then also use Blender’s own animation tools, like putting text on paths, animating spacing or size, for a variety of typography animation. For instance, I used a lattice deform to wrap text around a hammer (the lattice is one dimensional, uses linear deform, and wraps multiple times around the hammer). I got into some small issues with Blender’s text though:

The fill (tessellation) algorithm for text is very fast but also very ugly: lots of long triangles that don’t deform well. I used remesh to give me a quad grid, but I had to solidify the text first, as remesh only works on volumes. Then I hit a bug, either in the font or in Blender: at certain sizes, the tessellator fails to give a closed surface, breaking the solidify/remesh. I ended up using scaling instead of font size to avoid this problem.

Pixel Hammer

This was a fun one that I wish I had just a little bit more time to refine: I wanted all the Mos to hammer out colored circles, which would change color with each strike. As we zoom out, the individual circles become large pixels of the next shot: the “Software Highway” that the Mos themselves are creating through collaboration and sharing work. So first I animated one Mo hammering (actually, I stole the animation from a previous shot and made it cyclic) then exported a bunch of mesh-caches. All the Mos you see animated are using the same animation and the mesh cache modifier, with curves to make them a bit offset. As we zoom out the rest of the field is covered with non-animated simple Mo meshes that are too far away to see clearly. The pattern of the hammering is synced during the zoom out with frames from the next shot render, using a simple Python script called ‘pixel_mosaic’ which is unfortunately slow to run (it takes longer than the render of the shot!). The shot is not bad, but with more time I would have polished it further. One of the issues with working at this scope is organization, and I would have gone fairly insane without a bunch of Python scripts for arraying and duplication, renaming, and timing to the animation:

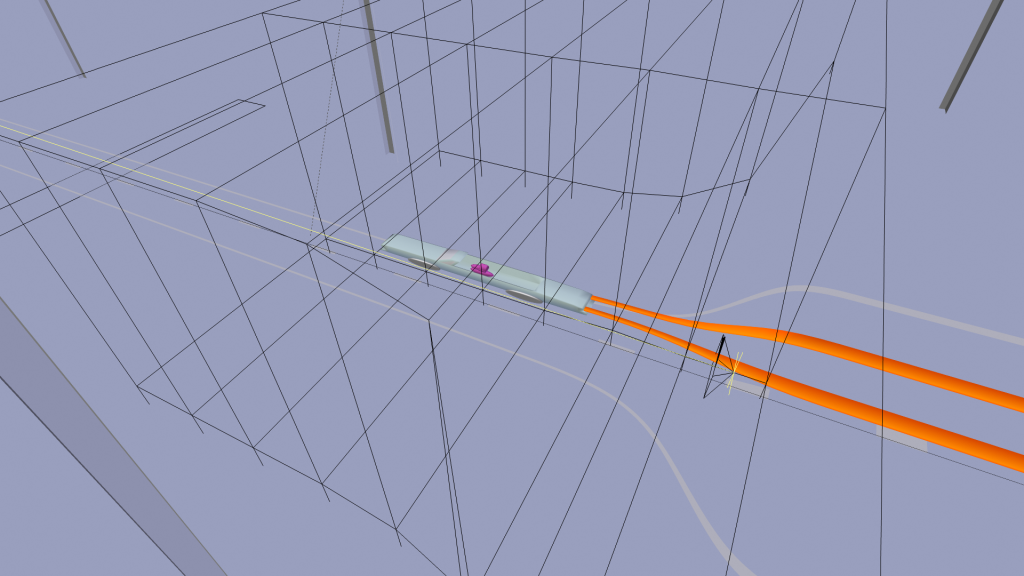
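The ‘pixel_mosaic’ idea described above, turning a frame of the next shot into one color per hammered circle, can be approximated by averaging the image into a coarse grid. The sketch below is not the production script; it works on a plain nested list of RGB tuples, and the function name `mosaic_colors` is hypothetical.

```python
def mosaic_colors(pixels, cols, rows):
    """Average an image into a cols x rows grid of colors.

    `pixels` is a list of rows, each row a list of (r, g, b) tuples.
    Assumes cols <= image width and rows <= image height.
    """
    height, width = len(pixels), len(pixels[0])
    grid = []
    for gy in range(rows):
        y0, y1 = gy * height // rows, (gy + 1) * height // rows
        row = []
        for gx in range(cols):
            x0, x1 = gx * width // cols, (gx + 1) * width // cols
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(tuple(sum(c[i] for c in block) / len(block) for i in range(3)))
        grid.append(row)
    return grid

# A 2x2 checkerboard collapses to a single mid-grey cell.
checker = [[(0, 0, 0), (1, 1, 1)], [(1, 1, 1), (0, 0, 0)]]
one_cell = mosaic_colors(checker, 1, 1)
```

Each grid cell would then drive the material color of the circle at the matching position in the field. A pure-Python loop like this is slow on full-resolution frames, which fits the observation that the script took longer than the render.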

The Road

This one is fairly straightforward. I used Arrays and Curves to build the road and constrain the car motion. There is a certain design issue with curves deform: the bounds/fit options are on the curve rather than modifier. This means you need to duplicate the curve for the road in order to deform the cars, rather than use one curve for both. In practice, the hook modifiers I used to rig the road needed to be applied anyway or I got odd velocity changes on the cars.

One fun thing is having a lattice around the camera to simulate lens distortion, and scaling the cars as they pass the camera for a neat distorting motion-blur-like effect. At night the cars are just streaks of light, but in the daytime, the taillights have shapekeys that allow a real 3D motion blur effect.

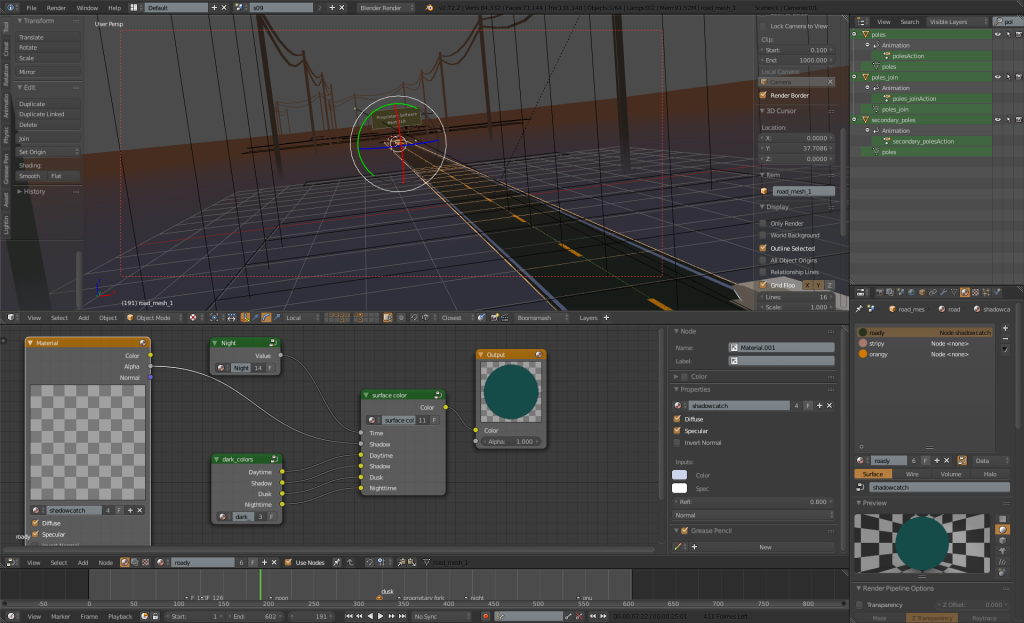

I used Blender Internal in this (and most shots) but I got into a small issue: I wanted shadows from the sun on a shadeless material. As a result, most of the surfaces use nodes, with various nodegroups that blend colors with a shadow-only material. To simulate lighting changes, a node group called ‘day_night’ outputs a single value that mixes in each material’s day/night/dusk and shadow colors. Animating the one nodegroup (and the angle of the sun, and the world colors) allows a global change in lighting over the entire scene.
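The single-value control can be illustrated with a small color-mixing function. This is a sketch of the principle only, not the node setup from the production files: the mapping of 0 to day, 0.5 to dusk and 1 to night is an assumption, as are the function names and example colors.

```python
def lerp(a, b, t):
    """Linearly interpolate between two RGB tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def day_night_color(day, dusk, night, t):
    """Blend three colors with one control value: 0 = day, 0.5 = dusk, 1 = night."""
    t = min(1.0, max(0.0, t))
    if t < 0.5:
        return lerp(day, dusk, t * 2.0)
    return lerp(dusk, night, (t - 0.5) * 2.0)

# Every material keeps its own three colors but reads the same control value.
road = day_night_color((0.6, 0.6, 0.6), (0.5, 0.3, 0.3), (0.05, 0.05, 0.1), 0.25)
```

Because every material reads the same `t`, animating that one value shifts the whole scene from day to night consistently.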

Stock Tickers

Here I used my TextFX script but I found that it was too cumbersome, so I added a new animation mode to the addon: increment or ‘add’, which adds a number to the original string (assumed to be a float number or you’ll get a Python error). That way I could animate the stock prices with one segment and a single curve. If you want to be fancy you could grab real data from a stock exchange for the prices! For the little up and down arrows I used Blender’s “object font”, as well as for the grid behind the numbers.
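The ‘add’ mode boils down to parsing the original string as a number, adding an animated amount, and formatting the result back to text. The following is a minimal sketch under that reading, not the addon's code; `add_to_string` and its `decimals` parameter are hypothetical.

```python
def add_to_string(original, amount, decimals=2):
    """Parse `original` as a float, add `amount`, and format the result back to text."""
    value = float(original)  # raises ValueError if the string is not a number
    return f"{value + amount:.{decimals}f}"

# One animated curve supplies `amount`; the ticker text follows it.
print(add_to_string("42.10", 1.5))
```

The `float()` call is where a non-numeric string would fail, which matches the Python error mentioned above.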

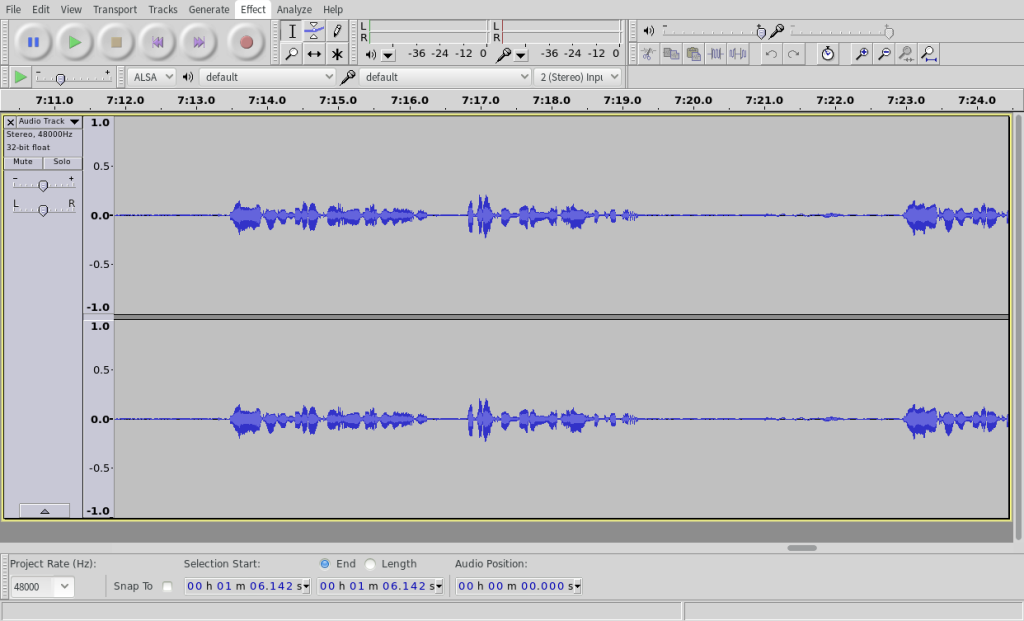

Voice Recording- remember to turn the fridge back on!

We are not primarily a sound studio, but we have a nice Studio Projects Mic (thanks for the recommendation Jan), a USB sound interface (a mic port pro), and lots of bookshelves to provide sound dampening. And with Audacity running on my laptop, we switched off the fridge (ominous foreboding). Sound recording went almost without a hitch. Had we the time, we’d have experimented more with performance. But the biggest disaster was when two days later Urchin’s artist-in-residence tried to eat some beans.

Editing and Sound

I really wanted a chance to evaluate Pitivi or another editing program in a production environment. But I was so down to the wire that I had to use Blender’s proven VSE for the editing. Without time or budget to arrange for a sound designer (I literally had no window of locked timing to send somebody) I was forced to do ‘sound design’ myself in Blender. Our friend Jim (James P. McQuoid) kindly offered to make some guitar/bass music, so we got together online and on the phone. Late on the penultimate day, when I was oh so close to locking picture, he sent me some recordings he had made in his home studio with analog effects, using Ardour for the recording. On my end, I couldn’t get Ardour running! So I edited the sound in Blender as best as I could. Blender’s not really ideal for sound design: you can’t move sounds on subframes, there is no level meter, no sound plugins, and even the levels you hear while editing might not be exactly what you output, especially if they are animated levels. With no time to spare for foley, I downloaded a tonne of sounds from freesound.org, an amazing resource. Most are CC0, some are CC BY. Here’s a list of credits:

airtaxi benboncan cactus2003 crashoverride61088 dave-des davidbain ecfike elliotlp flint10 gchase hunter4708 irishcinema jamesabdulrahman jasonlon lavik89 lloydevans09 ludvique martypinso misscellany monotraum muses212 northern-monkey pandotrix-emark primeval-polypod simosco snapper4298 soundsexciting swiftoid wjoojoo

Rendering

With flat shaded materials most shots don’t have lights – Blender is actually very close to being able to render most of these shots in OpenGL alone. However, Blender Internal is quite fast, especially with this setup. In the few cases where I wanted the appearance of lighting, I’d use separate materials with different brightness on the same object. The road shots were an exception with their ray traced shadows, but even those relied on animated colors instead of lighting.

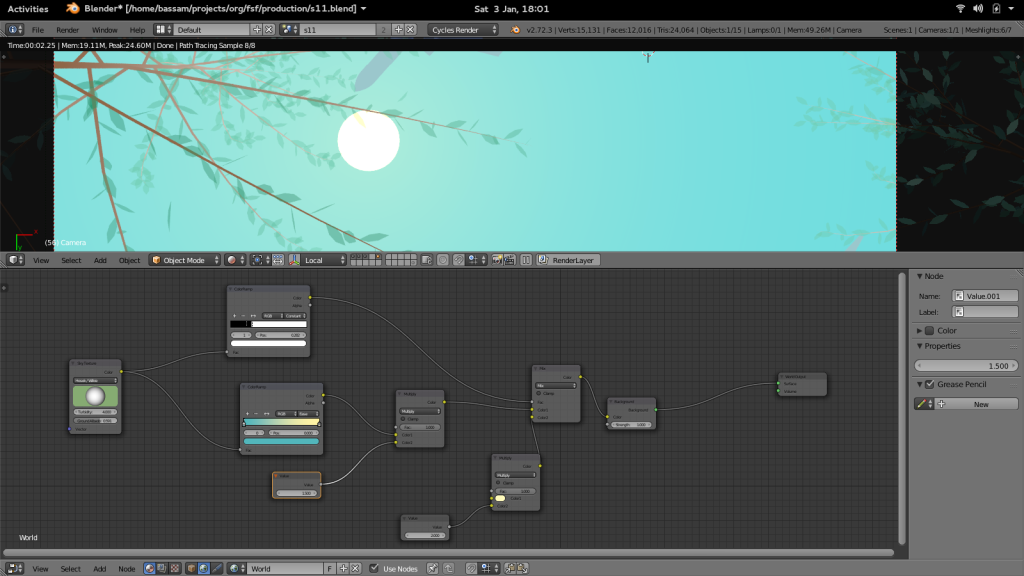

For the shot with the planes I used Cycles, but only so I could process the world sun/sky via nodes to produce a cartoony sun. Internal doesn’t give nodes to the world material as far as I know.

Flat Shading, Soft Gradients and Banding

A small note about using subtle gradients and washes: they are highly susceptible to banding when rendered into 8-bit formats. This was an issue in multiple ways. Blender has a ‘Dither’ option in post processing that shuffles the pixels around and prevents banding in the 8-bit PNG outputs. Inkscape does not; I ended up recreating .svg images within Blender to avoid the banding, making Inkscape more of a mockup tool (for this project) than a production pipeline tool. The above picture uses the posterize filter in Krita to illustrate the effect of banding on the right side of the image.
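Why dithering helps can be shown with a few lines of Python. This is a generic illustration of noise dithering before 8-bit quantization, not Blender's actual implementation; the function name `quantize` is hypothetical.

```python
import random

def quantize(value, rng=None):
    """Convert a 0..1 float to an 8-bit integer, optionally adding dither noise first."""
    scaled = value * 255.0
    if rng is not None:
        scaled += rng.random() - 0.5  # up to half a level of noise in either direction
    return max(0, min(255, int(round(scaled))))

rng = random.Random(0)
target = 100.4 / 255.0  # a shade that falls between two 8-bit levels

plain = quantize(target)  # always snaps to the same level, producing a hard band
dithered = [quantize(target, rng) for _ in range(10000)]
average = sum(dithered) / len(dithered)  # close to the in-between shade
```

Without noise, every pixel of a soft gradient snaps to the nearest level and the steps become visible. With noise, neighbouring pixels land on adjacent levels in proportions that average out to the intended shade.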

Finally, compression to a video format further reduces the chroma information in the image and increases the banding even more. Here I resorted to overlaying a subtle grain on the images (you can find 35 mm grain videos online) at 30% just before compressing, which can also be a nice detail in itself. Those files are not free to reshare; it would be nice to have either fully CC-licensed grain files for use with open movies, or a grain node in Blender / grain effect in Pitivi for procedural grain.
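For reference, an overlay blend at 30% can be written per channel as below. This uses the common textbook overlay formula and a simple opacity mix; whether Blender's sequencer computes it in exactly this way is an assumption, and the function names are hypothetical.

```python
def overlay(base, grain):
    """Standard overlay blend for one channel, values in 0..1."""
    if base < 0.5:
        return 2.0 * base * grain
    return 1.0 - 2.0 * (1.0 - base) * (1.0 - grain)

def add_grain(base, grain, opacity=0.3):
    """Mix the overlay result back over the original at the given opacity."""
    return base + (overlay(base, grain) - base) * opacity

# Mid-grey grain (0.5) leaves the image untouched; brighter grain lifts it slightly.
unchanged = add_grain(0.2, 0.5)
lifted = add_grain(0.2, 1.0)
```

Because mid-grey is neutral under overlay, grain footage centred around 50% grey only perturbs pixel values up and down, which breaks up the bands without shifting the overall brightness.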

Compression

I have a working knowledge of what containers and codecs are out there, as well as their relative free/non free status- but I am not an expert by any means, especially at the end of an 80 hour week/ 28 hour single ‘day’ marathon. So I opted to use Transmageddon to produce .webm files for the web. Initially I used Blender’s .ogv output for a test, but then used the lossless h264 mode to make a master.

A scheduled power outage forced me onto my laptop, where Transmageddon/Gstreamer in Fedora 21 suddenly lost the ability to play h264 streams, due to a bug in Gstreamer, PackageKit, or both. So I re-rendered my master in huffyuv (nicely avoiding h264 altogether), and went back to Transmageddon… which just didn’t work – no errors, it would just sit there, consuming 0% CPU. Finally I fired up Pitivi, dropped the huffyuv track into the timeline, and output .webm files at multiple resolutions from there.

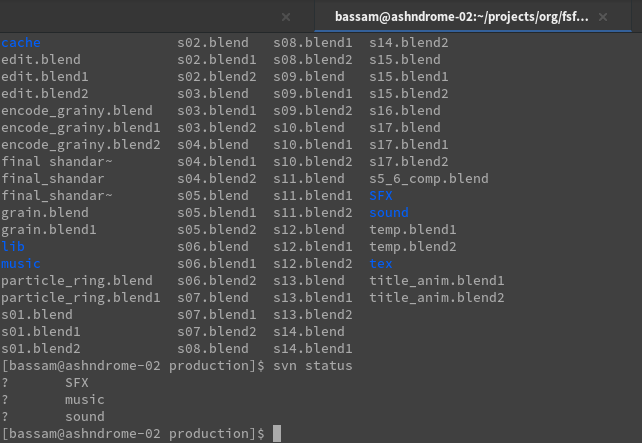

Production Files

BLAM! An open movie is born. We’re sharing all the production files — feel free to use them under a CC BY SA license, while all scripts with the project are under the GNU GPL (2 or later). You can use these to produce language localizations, look at how shots were made, or make your own new work. Download HERE.

If you want to make an (audio) localization of the movie, we’ve made a sfx/music track available for you to use with new dialog as a short-cut. You can download it HERE.

In Summation

Overall this project was a positive experience, with only a few things I’d change. There are some small glitches/ non-ideal details in some shots that I could fix, nothing huge (a dissolve here, timing change here, background change there), and some overall pacing I could tighten (the road shots, stock tickers and internet shot could be a bit shorter/ feel faster). Given some extra time, I’d have a professional sound engineer do the mixing and mastering, experiment with different deliveries of the V.O., and probably drop in a bit more music.

Bugs I should be filing:

- Tessellator for fonts fails for certain font/size combinations in Blender

- h264 not working in gstreamer in Fedora 21: This is tricky as it has to do with multiple pieces of software: Gstreamer, PackageKit and RPMFusion (since h264 is non-free/ patent encumbered and not shipped by default in Fedora)

- Transmageddon failing weirdly in Fedora 21

Some list of wants in no particular order:

- Better Dependency and File-linking capability in Blender: probably coming by the end of 2015! This would allow a more fluid and cartoony Mo rig, and the ability to both link the character in and do custom deformers right in your animation files.

- Better Particle system, tessellation options for text in Blender: This isn’t really needed due to either workarounds or compromises for this project, but it would be useful for either speeding up workflow or enabling more elaborate effects.

- Out of the box working ‘pro’ sound in Fedora: I’d love to see Ardour3 actually work out of the box. I think sound on Linux is ‘fixed’ for consumer uses thanks to PulseAudio, but the whole Jack/Realtime kernel integration feels over-engineered and fiddly. Even Audacity, which supposedly works with Pulse, required me to run it with pasuspender or it would crash.

- What seems like an oxymoron: ‘pro’ compression for dummies: a compressor/transcoder that is bullet proof, with constraints or presets for different devices, and perhaps quality preview for output – and the ability to save a batch script for multiple output targets and resolutions. What we have right now is either consumer oriented and limited (Transmageddon, Handbrake) or you get to fiddle with low-level codec knobs and break the output... not fun.

- Support for .png and other image sequences in Pitivi for work with transparencies, masks, etc.

- Funnily enough, Firefox’s new h264 support crashes the browser on my computer. For now, I’ve avoided this by placing the .webm sources for video first, but this might break Safari…

That’s all for now, I hope you found this post informative!

Well, no surprise probably that I’m excited about this post. But some really interesting stuff in here! I really like the way the hair was handled… clever and a bit surprising. I’ll also have to learn more about broken rigs. 🙂

Hope your fridge wasn’t too full!

We ate the chicken anyway!!

Heh yes in general out of the box professional audio requires some work for many distributions. In general we recommend that people use actually audio centric distros for these reasons. Pulse tends to literally just cause more problems than solve problems for professional audio sadly. It can be set up correctly to get out of the way, but often times this is not done by most common distros.

That being said, next time if you are working on it call me dang it. You should know by now I would be more than willing to help you get up and running on audio on Linux.

Seablade

Awesome! Thanks a lot for posting this great trip! 😀

[…] As part of the celebration of the Free Software Foundation’s 30th anniversary, Libby Reynish commissioned Urchn to make a short film for the annual fundraising campaign. Here is the making-of. […]

Great work bassam & fateh! I say this as a blenderhead and an fsf member 🙂 my highlights would be mo design, the awesome car trails and rms and hal as stocks 😀 and ty for a very detailed/informative making-of (really really useful!)

[…] The Making of User Lib […]

Hey guys – thanks for taking the time to do this write up. It is incredibly interesting to have the behind the scene view into production and all the little nuances that make it so. Congrats and fantastic end result.

[…] User Lib breakdown – Urchn shares details (and files) on their promo animation for the FSF […]

[…] the ones into this kind of work, and blender in particular, you can find a detailed explanation of the challenges that the makers of the work went through and how they fixed them right here. Definitely a great read into the whole process from design to finish. Great job guys! And of […]

fedora 21? i was using it for 2 months, great experience, they ended up uploading broken wifi packages/kernel for final version, came back to ubuntu…

this is brilliant! thanks for sharing this with us!

am part of a 2 person startup doing film and animation.. we use blender for all our 3d work..

youve inspired me to try a 2d looking short!

Thanks so much this was a great breakdown of real world production problems. I loved the problem solving, I guess scripting is what made the process achievable in Blender. I wonder what is lacking in Blender that would otherwise have eased the production in something like After Effects?

Anyway, thank you for taking the time.

Great work! Congratulations!

I would love to see more of that about free software.

The guitar sound at the end is really awesome, can I find this somewhere?