Greetings comrades!

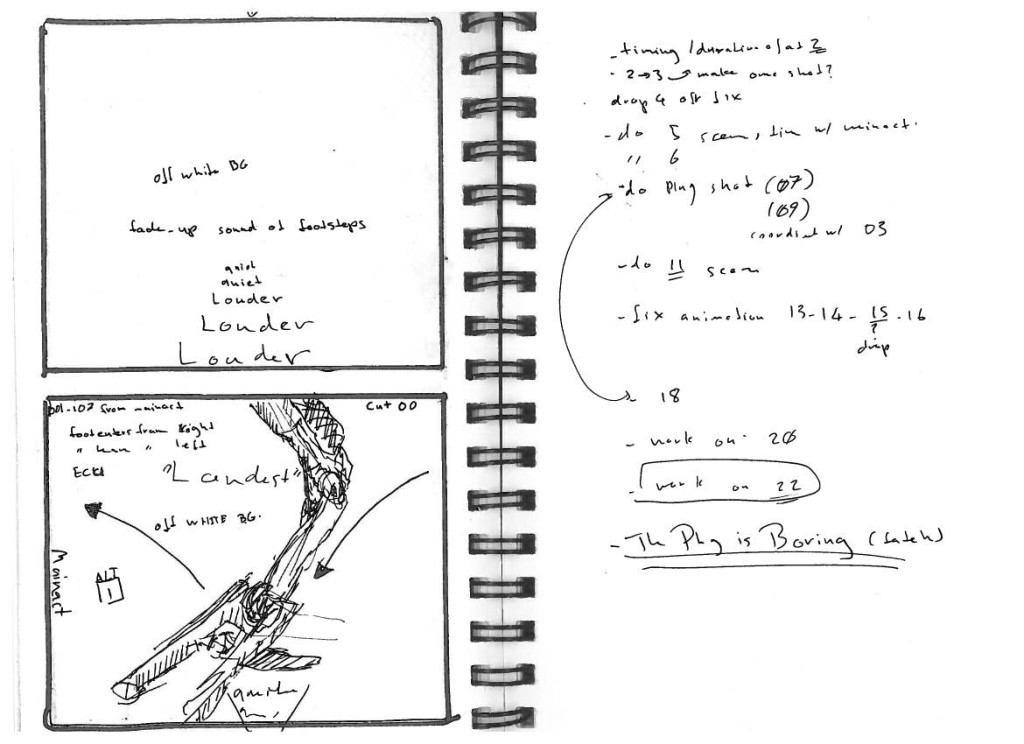

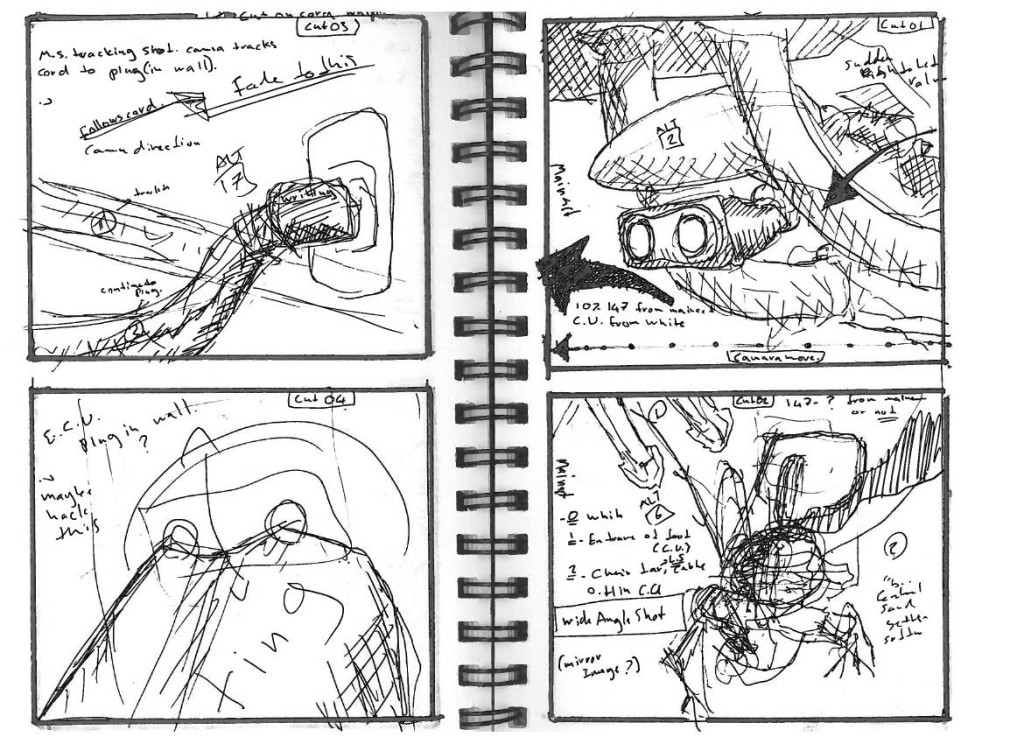

So, as promised, here’s a more in-depth update about the poster timelapse. In our (hopefully just in the movie, but you never know…) dystopian future, the subway walls need to be peppered with images of successive ruthless dictators, bent on making us love them through propaganda. The effect appears throughout many shots, sometimes very close to the camera and sometimes in the background.

Posters need to be added to the walls over time, then removed, or simply covered by other posters. This takes attention to the images (which image in the succession), overlaps (posters need to sit on top of each other rather than intersect) and order of removal (posters under other posters can’t go first). In addition, the poster materials need to age: posters under other posters can’t, for example, accumulate dirt, and they could get ripped when the top poster is removed… and… and… and…

*deep breath*
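Stripped of the visuals, the ordering rules above are mostly bookkeeping. Below is a minimal, hypothetical Python sketch of that bookkeeping, not the actual Animation Nodes setup. It assumes posters are axis-aligned rectangles in wall space, kept in a stack from bottom to top, and that a poster can only be removed once nothing above it overlaps it. The names `Rect`, `Poster` and `PosterWall` are invented for illustration. So is the succession rule in `paste`, which stops a poster from covering a later dictator’s poster.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Rect:
    """Axis-aligned poster footprint in wall space: (x0, y0) to (x1, y1)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def overlaps(self, other: "Rect") -> bool:
        # Strict inequalities: posters that only touch along an edge don't overlap.
        return (self.x0 < other.x1 and other.x0 < self.x1
                and self.y0 < other.y1 and other.y0 < self.y1)


@dataclass
class Poster:
    name: str
    image_index: int  # position of this dictator in the succession
    rect: Rect


@dataclass
class PosterWall:
    stack: List[Poster] = field(default_factory=list)  # index 0 = bottom layer

    def paste(self, poster: Poster) -> None:
        # Hypothetical succession rule: never cover a later dictator's poster.
        for p in self.stack:
            if p.rect.overlaps(poster.rect) and p.image_index > poster.image_index:
                raise ValueError(f"{poster.name} would cover newer poster {p.name}")
        # New posters always go on top of the stack, so they layer over older ones.
        self.stack.append(poster)

    def covering(self, name: str) -> List[str]:
        """Names of posters higher in the stack that overlap `name`."""
        i = self._index(name)
        target = self.stack[i].rect
        return [p.name for p in self.stack[i + 1:] if p.rect.overlaps(target)]

    def remove(self, name: str) -> Poster:
        # Posters under other posters can't go first.
        blockers = self.covering(name)
        if blockers:
            raise ValueError(f"{name} is still covered by {blockers}")
        return self.stack.pop(self._index(name))

    def _index(self, name: str) -> int:
        for i, p in enumerate(self.stack):
            if p.name == name:
                return i
        raise KeyError(name)
```

Take overlapping posters `a` and `b`, plus a poster `c` off to the side. Here `b` and `c` can come down in any order, but `a` stays blocked until `b` is gone. Keeping an explicit stack means "who is on top" is always just a comparison of indices.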

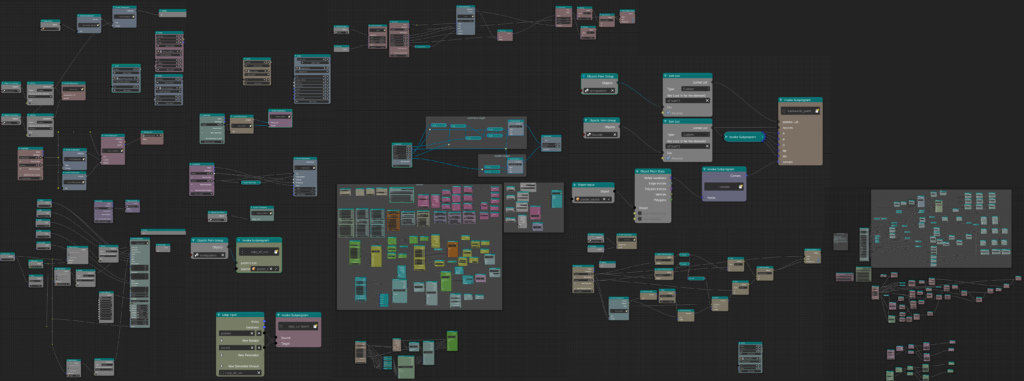

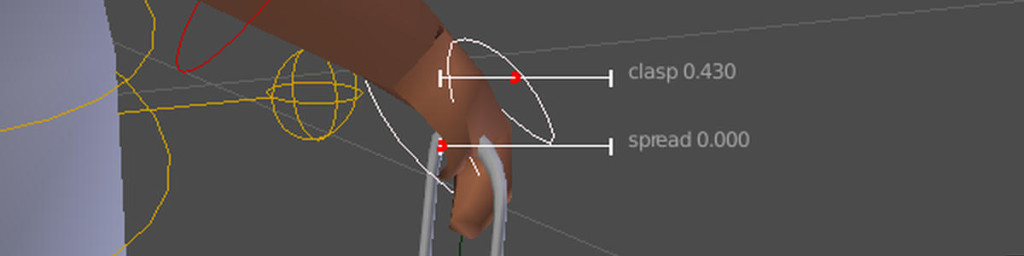

So I’m building a poster control ‘machine’ using a brilliant Blender add-on called Animation Nodes, which also allows mixing nodes and Python via script nodes. This is what my code and nodes look like right now:

And this is what they do:

There’s a lot of hidden stuff in there too: they make occlusion masks using vertex colors and vertex groups, so the posters “know” when they are under or over each other. This will allow me to combine it with…

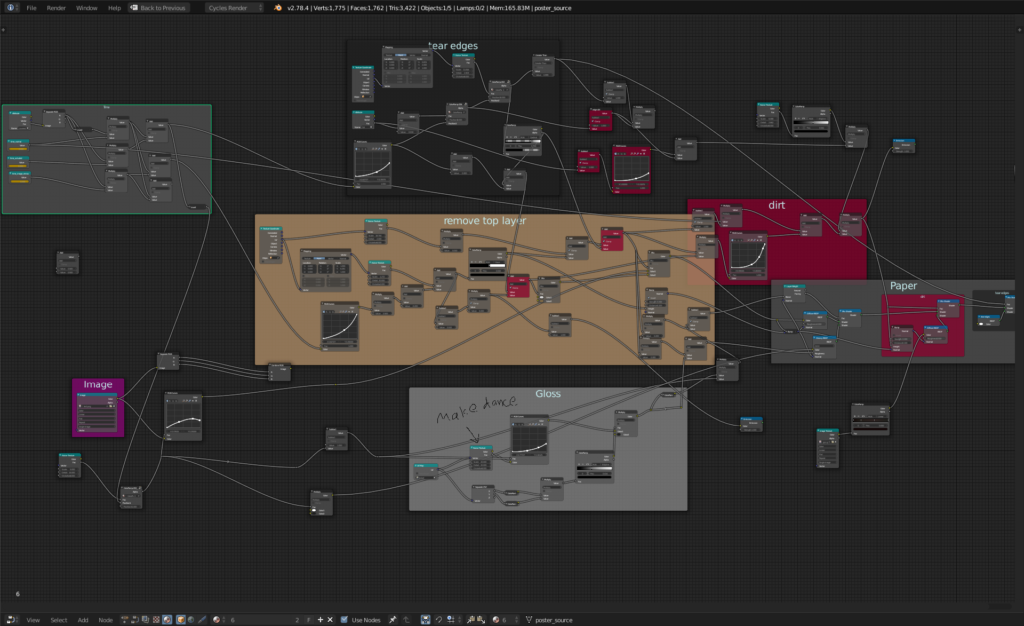

…my poster material nodes!

Let’s see what those look like in animation:

Phew! Pretty cool. It’s still missing a few details and tweaks, but that’s the basic idea. The strange purple rectangle represents an occluding poster. The image is adapted from a beautiful poster made by Michael Kalinin for the movie, and is just a test image. The text uses our custom-made font, “soomerian modern”, in which all the text in the movie is written.
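The occlusion masks mentioned earlier boil down to a per-vertex "am I covered?" value that a material can read to switch between the exposed and hidden looks. Here is a minimal sketch in plain Python, assuming each poster is a subdivided rectangle and its occluders are the rectangles of posters above it in wall space. The helpers `grid_vertices`, `inside` and `occlusion_mask` are hypothetical, and the actual node setup isn’t shown in this post. The step that writes the result into a Blender vertex group is left as a comment so the sketch runs outside Blender.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in wall space


def grid_vertices(box: Box, nx: int, ny: int) -> List[Point]:
    """Vertices of a poster plane subdivided into nx * ny quads, row by row."""
    x0, y0, x1, y1 = box
    return [(x0 + (x1 - x0) * i / nx, y0 + (y1 - y0) * j / ny)
            for j in range(ny + 1) for i in range(nx + 1)]


def inside(p: Point, box: Box) -> bool:
    """True if point p lies inside or on the edge of box."""
    return box[0] <= p[0] <= box[2] and box[1] <= p[1] <= box[3]


def occlusion_mask(vertices: Sequence[Point], occluders: Sequence[Box]) -> List[float]:
    """Per-vertex weight: 1.0 if any poster above covers the vertex, else 0.0."""
    return [1.0 if any(inside(v, b) for b in occluders) else 0.0 for v in vertices]


# In Blender, weights like these could be stored with
#   vg = obj.vertex_groups.new(name="occluded")
#   vg.add([index], weight, 'REPLACE')   # once per vertex
# or written into a color attribute instead; omitted here so this runs standalone.
```

Consider a 2×2 poster subdivided into 2×2 quads with an occluder over its upper-right half. The four vertices in that corner get 1.0 and the other five get 0.0. A binary mask like this is the simplest option. A softer falloff near the occluder’s edge would be a natural refinement.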

So what’s left? Well, combining the animation nodes for the posters with the poster materials.

In addition, I have similar systems (not shown here) for the wall itself, which have to interact with the posters: for instance, the posters change the dirtiness levels of the walls, and falling tiles rip out the posters.
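One way posters could change wall dirtiness is to let dirt accumulate frame by frame only where nothing covers the surface. The same rule works for posters hidden under other posters. Below is a minimal sketch under that assumption. It uses a linear dirt rate clamped at 1.0 and a per-frame coverage mask like the occlusion mask above. `step_dirt`, `simulate_dirt` and the rate values are hypothetical and not taken from the actual wall system.

```python
from typing import Callable, List


def step_dirt(dirt: List[float], covered: List[float], rate: float) -> List[float]:
    """Advance dirt by one frame; covered vertices (mask 1.0) collect none."""
    return [min(1.0, d + rate * (1.0 - c)) for d, c in zip(dirt, covered)]


def simulate_dirt(num_vertices: int, frames: int, rate: float,
                  covered_at: Callable[[int], List[float]]) -> List[float]:
    """Accumulate dirt over `frames`, given a coverage mask for each frame."""
    dirt = [0.0] * num_vertices
    for frame in range(frames):
        dirt = step_dirt(dirt, covered_at(frame), rate)
    return dirt
```

Suppose a wall vertex sits behind a poster for frames 0 to 4, and the poster is then torn down. Over 10 frames at a rate of 0.05, that vertex ends up at about 0.25 dirt while its exposed neighbours reach about 0.5. The result is a cleaner patch where the poster hung.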

Hope you enjoyed this mini update!