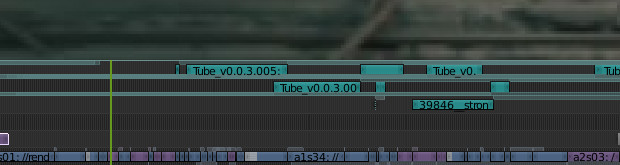

Blender’s Video Sequence Editor (or VSE for short) is a small non-linear video editor cozily tucked into Blender, with the purpose of quickly editing Blender renders. It is ideal for working with rendered output (makes sense) and I’ve used it on many an animation project with confidence. Tube is being edited with the VSE, as a 12 minute ‘live’ edit that gets updated with new versions of each shot and render. I’ve been trying out the Python API to streamline the process even further. So… what are the advantages of the Video Sequence Editor? Other than being Free Software, and being right there inside Blender, it turns out there are quite a few:

- familiar interface for Blender users: it follows the same interface conventions for selecting, scrubbing, moving, etc., which makes it very easy to use even for beginning to intermediate users.

- tracks are super nice: there are a lot of them, and they are *not* restricted: you can put audio, effects, transitions, videos or images on any track. Way to go Blender for not copying the skeuomorphic conventions that make so many video editors a nightmare in usability.

- since Blender splits selection and action, scrubbing vs. selection is never a problem: you scrub with one mouse button and select with the other, so you never have to scrub in a tiny target, or end up selecting when you wanted to scrub. I’ve never had this ease of use in any other editor.

- simple ui, not super cluttered with options

- covers most of the basics of what you would need from a video editor: cutting, transitions, simple grading, transformations, sound, some effects, alpha over, blending modes, etc.

- has surprisingly advanced features buried in there too: speed control, multicam editing, proxies for offline editing, histogram and waveform views, and ‘meta sequences’, which are basically groups of anything (movies, images, transitions, etc.) bundled together in one editable strip on the timeline.

- as in the rest of Blender, everything is keyframable.

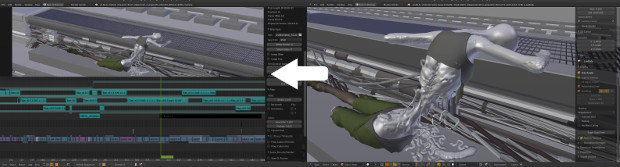

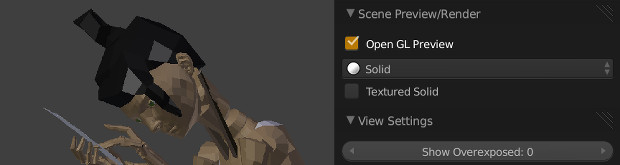

- you can add 3D scenes as clips (Blender calls them strips), making Blender into a ‘live’ title / effects generator for the editor. They can be previewed in OpenGL, and render out according to the scene settings.

- it treats image sequences as first class citizens, a must!!!

- Python scriptable!!!! big feature IMO. (uses the same api as the rest of Blender)

Disadvantages are also present, I should mention a few:

- UI is blender centric! so if you are not a blender user, it does not resemble $FAVORITEVIDEOEDITOR at all. Also, you have to expose it in the UI (only a drop down away, but most people don’t even realize it is there)

- no ‘bin’ of clips, no thumbnail previews on the video files, though waveform previewing is supported.

- lacks some UI niceties for really fast editing, though that can be fixed with python operators, and also is getting improvements over time.

- could be faster: we lost frame prefetching in the 2.5 transition, however, it is not much slower than some other editors I’ve used.

- not a huge amount of codec support: Since Blender is primarily not a video editor, supporting a bajillion codecs is not really a goal. I believe this differs slightly cross platform.

- bad codec support unfortunately means not only that some codecs don’t work, but that some of the codecs work imperfectly.

- needs more import/export features (EDL is supported, but afaik only one way)

- some features could use a bit of polish. This is hampered by the fact that this is old code, a bit messy, and not many developers like to work with it.

Needless to say this is all ‘at the time of writing’. Things may improve, or the whole thing gets thrown into the canal 😉

So what have I been up to with Blender’s video editor? Quite a bit! Some of it may end up not-so-useful in the end, but experimentation could yield some refinements. The really good thing about using Python is that I can ‘rip up’ complex things and rearrange / redo them, so the experiments don’t result in a huge waste. Let’s have a peek.

Automatic Updates – take 1 – ogler.py:

In the beginning of the project I thought to use Blender’s scene support to create a ‘live’ edit, that did not use video or image clips at all, but instead, referenced actual animation shots directly. This would be done by linking the shots into the edit as scenes, then using Blender’s scene strip support to edit them live as opengl previews. In my more optimistic moments, I imagined pressing ‘render’ directly from the sequence editor, and rendering the entire movie at once.

Early tests were promising, and in fact, for very small edits (say under 10 shots) this approach could work quite well. However, things were getting slow for Tube, as our shots were a bit too heavy for editing live, and I wanted the speed of movie clips without the loss of the dynamic connection. Chris Webber, lead developer of GNU MediaGoblin, swooped to my assistance, and created ogler.py: a sequence strip addon that renders out the linked scenes into OpenGL previews, and then swaps them into the edit, but can swap them back to scene strips. So you can edit in the scenes, oglify them and edit fast, and then de-oglify to keep the connection live anytime you want.

Sadly, once Tube got to around 30-40 shots, this became unworkable. Even loading the file with all the linked scenes would take forever, and I started to run out of RAM. It became clear that the memory requirements for the full movie (around 70 shots) would be too big to just link into one file. Sadly, I oglified for the last time, deleting the scene links as I went, leaving burning disconnection and lament, where once was a harmonious connected live edit.

Automatic Updates – take 2 – smash_all.py:

After that, updates were not automatic. As shots were animated, I’d open the scene file, OpenGL preview it (henceforth, the verb is boomsmash) and refresh the edit. Sometimes I’d download a preview directly from an animator, or from Helga, and skip the whole boomsmashing thing. With multiple animators working this got a bit tiresome and error prone, and slowly the video files would drift from ogler’s handy folder, getting scattered on my hard disk wherever they were dropped initially. Also, we started getting rendered shots in, so these found their way into the edit. These are managed quite well by Helga, as image sequences on the server. I would drop them into the sequencer via the mounted network folder, and they worked surprisingly well: fast, but not realtime. However, every now and then I’d drop a local render in too, complicating things again.

At this point I started to have two problems. One, the setup was far too chaotic, and only worked on one workstation. Two, it was slow, and I need realtime performance to do more careful and artistic editing down the line. I decided to solve the organization problem first, as having a working edit is of paramount importance when reviewing and modifying animation shots, for the obvious reason that they have to work together in the edit.

I wrote a simple script called smash_all.py. The first time you run it, it boomsmashes the entire list of shots into one folder and saves the timestamps of the blend files it just rendered. On subsequent runs, it only does this for changed files (by checking the timestamps), allowing me to run it to automatically update animation shots as they progress. A nice side effect: all the movie files are consistently named, and live in one folder. The actual process runs for a bit, but it can be run in the background with no user intervention.
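A minimal sketch of that timestamp check, in plain Python. This is not the actual smash_all.py: the function names and the JSON stamp file are assumptions made for illustration, and the boomsmash step itself is left out.

```python
import json
import os


def load_stamps(stamp_path):
    """Return the {blend_path: mtime} mapping saved by the previous run."""
    if not os.path.exists(stamp_path):
        return {}  # first run: nothing has been rendered yet
    with open(stamp_path) as handle:
        return json.load(handle)


def changed_shots(blend_paths, stamps):
    """Return the blend files that are new or modified since the last run."""
    return [path for path in blend_paths
            if stamps.get(path) != os.path.getmtime(path)]


def save_stamps(stamp_path, blend_paths):
    """Record the current modification time of every shot file."""
    stamps = {path: os.path.getmtime(path) for path in blend_paths}
    with open(stamp_path, "w") as handle:
        json.dump(stamps, handle, indent=2)
```

On each run you would render only what `changed_shots()` returns and then call `save_stamps()`, so an untouched shot is never boomsmashed twice.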

The script is an addon, but it currently has a hardcoded shot list and folder locations. It wouldn’t be hard to make a config file it could import instead; in any case, all the paths are at the beginning of the file, easy to edit. We could improve it by getting the user’s home folder from the os module, making more paths relative to the blend file, and getting a few folders as options. I’d love to hear suggestions, as I’m not sure what the nicest thing would be.
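One possible shape for such a config, sketched under assumptions: the key names, the default folders and the JSON format are all hypothetical, not what smash_all.py does today. Defaults are built from the user’s home folder and an optional file overrides them.

```python
import json
import os

# Hypothetical defaults; the real script keeps its paths at the top of the file.
DEFAULTS = {
    "shots_root": os.path.join(os.path.expanduser("~"), "tube", "shots"),
    # A leading "//" is how Blender writes a path relative to the .blend file.
    "output_dir": "//boomsmash",
}


def load_config(config_path, defaults=DEFAULTS):
    """Return the defaults, overridden by any keys found in config_path."""
    config = dict(defaults)
    if os.path.exists(config_path):
        with open(config_path) as handle:
            config.update(json.load(handle))
    return config
```

A missing config file simply yields the defaults, so the script would keep working on a fresh workstation.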

Speed: Sequencer Proxies and proxy_workflow.py:

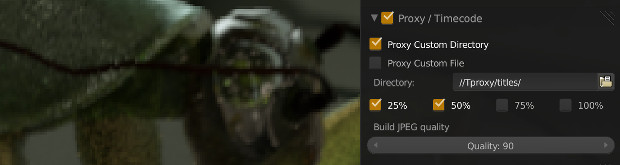

Blender, as I mentioned, has a built-in ability to do proxies for editing slow footage (examples are high resolution footage, EXRs, footage that lives on a network folder, etc). They are a great way to speed up your edit, and might be a cool way to do an offline edit (I’m trying to see how possible this is with some help from Python). Proxies are either movies or image sequences, and can be at 25 to 100% of the resolution of the original, but in JPEG or AVI JPEG formats, which are very fast. Each strip has a toggle to enable proxies, and then a bunch of options for desired sizes, timecode, and folder/file locations.

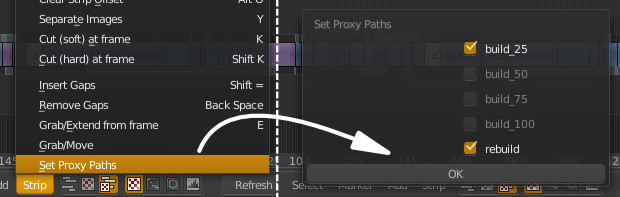

Since the strips I was using were at least partially on the server, I wanted the proxies to be local to my disk for speed, not on the same server with the rendered footage. So I needed the custom folder option, but… setting a custom folder for each and every clip one by one is just not an option for a lazy programmer (more seriously, it isn’t a good option for anybody). Since I plan to explore several workflow enhancements for proxies, I opted to make a single addon (proxy_workflow.py) that right now contains just a single operator, which gets a menu item and a hotkey (Ctrl-Shift-A for now). The operator creates a folder ‘Tproxy’ next to the edit.blend file, and populates it with (hopefully unique) subfolders based on the path of each strip. You simply select all the strips you want to proxy, and it will create proxies for the selection (but only for movie and image strips). It also gives you a popup so you can select the sizes required, without having to go strip by strip. Maybe in the future we can make the path an optional entry, for custom workflows.
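To make the folder layout concrete, here is a rough sketch of how a per-strip proxy folder could be derived from the strip’s source path. It is plain Python with a hypothetical function name, not the code in proxy_workflow.py, and the flattening rule is only one way to get ‘hopefully unique’ names.

```python
import os
import re


def proxy_dir_for(edit_blend_path, source_path, root_name="Tproxy"):
    """Return a per-source proxy folder located next to the edit file."""
    edit_dir = os.path.dirname(os.path.abspath(edit_blend_path))
    source_dir = os.path.dirname(os.path.abspath(source_path))
    # Flatten the source folder into a single name so that strips coming
    # from different folders get different proxy folders.
    flat = re.sub(r"[^A-Za-z0-9]+", "_", source_dir).strip("_")
    return os.path.join(edit_dir, root_name, flat or "root")
```

Strips that share a source folder share a proxy folder, and two oddly named folders (say `a/b_c` and `a_b/c`) would still collide, which is why the names are only hopefully unique. Inside Blender the result would be written to each selected strip’s custom proxy directory setting.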

A known problem: if the same source image or video is used for multiple strips, it will not proxy it, but it will create the custom folder correctly. Simply select one of the strips and do rebuild proxies and timecodes, and all will be well.

Speed: Future work:

It seems that even with local on disk proxies, the edit hits the network for the source files every time you scrub over to a new clip. This means scrubbing within one strip is fast, but the moment you ‘step’ over an edit, you get a little lag, dependent on the network speed. At the Drome, this is almost imperceptible, but at home… the edit literally just hangs. I can think of a few solutions.

- bug report/whine to Blender coders (or check the code myself) and see if this can be fixed in Blender. There should be no need for Blender to touch the source file if you are using a proxy.

- temporarily change the source folder to some dummy location, and save the original location in a custom property, and use an operator to toggle back and forth.

- build the proxies, but then use them *as* the source, and switch back with an operator.

- do the ‘offline’ part completely outside of blender. just make (low fidelity) copies of everything, local to the edit, outside of blender, and work with that. Export an EDL, or just swap the ‘real thing’ in later. I would prefer to do things within blender, and not have to resort to this.

The first option would be best, I think, unless there’s some reason I didn’t think of that this lookup of the original file needs to happen.
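The second option in the list (parking the real source folder in a custom property) could look roughly like this. It is a sketch under assumptions: it presumes a strip object with a `directory` attribute and dictionary-style custom properties, which is how Blender image strips behave as far as I know, and the property name is made up. Movie strips store a file path instead and would need their own variant.

```python
ORIGINAL_KEY = "original_directory"  # hypothetical custom property name


def toggle_offline(strip, dummy_dir):
    """Swap a strip's source folder with a dummy one, or restore it."""
    if ORIGINAL_KEY in strip.keys():
        # Already offline: restore the saved folder and forget the backup.
        strip.directory = strip[ORIGINAL_KEY]
        del strip[ORIGINAL_KEY]
        return "online"
    # Going offline: remember the real folder, then point at the dummy one.
    strip[ORIGINAL_KEY] = strip.directory
    strip.directory = dummy_dir
    return "offline"
```

Wrapped in an operator and run over the selected strips, this would give a single toggle between the networked and the offline state.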

Proxies and Alpha:

This wasn’t done for Tube (it was a midnight hack for a commercial project under deadline) but I ended up using it on Tube. Simply put:

Since proxies are .jpg files, they don’t have alpha channels. This can be an issue depending on your edit, if you want a smaller sized proxy of an image with alpha. To solve this I wrote an operator that uses ImageMagick to create .tga proxies instead (bigger, uncompressed, and faster than .png or .exr files), but then renames the extension to .jpg. Blender happily loads them as proxies, but the alpha still works.
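The trick can be sketched outside of Blender as follows. This is an illustration, not the operator itself: the function names and paths are hypothetical, and it assumes ImageMagick’s `convert` command is on the PATH (ImageMagick 7 installs usually call it `magick`).

```python
import os
import subprocess


def tga_proxy_command(source, proxy_jpg, percent=25):
    """Build the ImageMagick call that writes a scaled TGA beside proxy_jpg."""
    tga_path = os.path.splitext(proxy_jpg)[0] + ".tga"
    command = ["convert", source, "-resize", f"{percent}%", tga_path]
    return command, tga_path


def make_alpha_proxy(source, proxy_jpg, percent=25):
    """Write a TGA proxy, then give it the .jpg name Blender looks for."""
    command, tga_path = tga_proxy_command(source, proxy_jpg, percent)
    subprocess.run(command, check=True)  # raises if ImageMagick fails
    os.replace(tga_path, proxy_jpg)      # TGA data under a .jpg file name
```

For an image sequence you would call `make_alpha_proxy()` once per frame, with `proxy_jpg` set to wherever Blender expects that frame’s proxy file.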

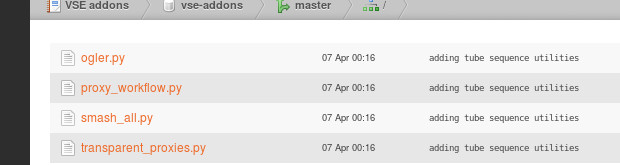

Where’s the code?

You can find the code, in its current unpolished and silly state, on Gitorious.

“Disadvantages are also present, I should mention a few:

4. could be faster: we lost frame prefetching in the 2.5 transition, however, it is not much slower than some other editors I’ve used.

5. not a huge amount of codec support: Since Blender is primarily not a video editor, supporting a bajillion codecs is not really a goal. I believe this differs slightly cross platform. ”

Hasn’t there been recent work on 4? There are options in preferences->system to set prefetch frames and cache mem limit.

For 5, Blender optionally uses ffmpeg, which would mean access to as many codecs as you want, but the options listed in the menus appear to be simplified. One thing there is that the build of ffmpeg you use could have multiple codecs disabled, which may be what the small options list indicates. I doubt this list would be hard to expand with an addon/patch. Maybe a dynamic menu based on ffmpeg queries of available codecs? For OS X/Windows builds you also get QuickTime to access the proprietary codecs.

Interesting overview! I’m using the VSE myself and like it. With a few scripts it’s actually quite good at editing.

There are however a few shortfalls. The most annoying would be that you don’t actually know if the proxy in the timeline reflects the latest render or not. There is no way to tell other than turning off the proxy and checking manually.

Also, missing bins, as you pointed out, is a huge disadvantage.

– True, having an indication that a proxy is out of sync would be very nice, along with a refresh capability. I’m guessing it could be done by taking a timestamp of the original file/files at the time of proxying, and checking it later. I wonder if this would be slow for image sequences (because they are lots of files)

– bins I’m on the fence about. I don’t see them as necessary but as one possible way to go. Rather than ‘implement bins’, I would prefer that users who like them write down what bins accomplish (for their workflow) and present that to developers instead. It is usually better to do this than to demand a specific feature. Why do I say this? Because video editors *suck*. They are awful – at least the mid range ones like Final Cut and Premiere – and should not be copied (I haven’t used Avid or Lightworks, so can’t comment there). If Blender can avoid the pitfalls of those editors, it can be good without having to copy every single little feature. This is a case where reinventing the wheel is fine, because everybody is using square wheels 😉

– of course, the coders might just decide ‘bins are the way to go’ – I just wouldn’t force it.
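As a rough illustration of the out-of-sync check discussed above: instead of storing a timestamp at proxy time, this sketch compares file modification times directly, which is a simpler stand-in for the same idea. The function name is hypothetical and nothing here is Blender API.

```python
import os


def proxy_is_stale(source_files, proxy_file):
    """True if the proxy is missing or older than any of its source files."""
    if not os.path.exists(proxy_file):
        return True
    proxy_time = os.path.getmtime(proxy_file)
    # For an image sequence this stats every frame, which is the part
    # that might be slow on a network folder.
    return any(os.path.getmtime(path) > proxy_time for path in source_files)
```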

Bassam, you have mentioned that other NLEs suffer from.. what exactly? Poor UI or workflow? How are they broken? I have found FCP (7 cannot speak for X) to be quite efficient, but I was raised on linear editing paradigms.

I would suggest that the bin and metadata deal is more suited to a less structured movie, live action as opposed to an animated feature, where one is often reconfiguring shot order based upon performance as well as story. Being able to manipulate and order assets based upon metadata in a flexible way is essential in that area.

Great post Bassam! Love to hear of real world problems and usage of the VSE for an actual production. I wonder though, what are the problems with Final Cut and Premiere? Why do they suck to you? Perhaps I am stuck with an old mindset, coming from linear editing days. I thought the point of bins and their associated metadata was flexibility from the user’s perspective, allowing them to rearrange assets based on metadata in an easy-to-read manner.

Certainly this access to metadata that you can manipulate is a hallmark of video editing (dramatic/narrative etc.) but not necessarily of animation, which I gather is often much more rigid in structure and perhaps not as dynamic in edit construction?

I am often re-organising an edit based on downstream variables: directors’/producers’ comments or story notes (audience feedback – yech), run time changes, altered reshoots, etc. Or just reversioning, which some hate, believing we should pre-edit on paper, not in post. I think that this is half true. A script should get you a long way to finished, but there should be room for creative modification, at which time you may need deeper, more flexible access to your assets, allowing the editor to sort and match, modify metadata and sift based on new requirements.

posting here due to issues with our commenting:

and my reply to David McSween 🙂 :

thanks for the detailed answer, my suspicion is that you have far more experience with editing than I have!

1st, Premiere: I cannot comment on it today, since the last version I used was Premiere 4.x (before the CS era):

- overcomplicated UI

- crashes frequently

- lots of issues with sync

- other (I forget, it’s been a while)

2nd, FCP:

- atrocious, inconsistent UI with loads of hidden surprises: depending on the type of clip or type of action, different things happen ‘hidden’ as you adjust timing, meaning: move a bunch of things, get a bunch of problems scattered in the shot.

- all the problems with codecs I had with Blender I had with FCP: this included h264 (which is not suitable for editing), so it doesn’t make a good online ‘throw a bunch of footage at me’ editor. Just like Blender, you have to be careful what you feed it.

- errors or unhappiness with the codecs would result in glitches or dropouts in the final render, without rhyme or reason. For instance, a ‘bad’ clip in the middle of the edit would cause a different area of the edit to glitch on render, making it really hard to debug, because you could not assume where the glitch came from.

- having a working edit does not guarantee a good render of that edit.

- no built-in proxy system that I could find: it is based on ‘losing’ and then reconnecting to the footage, an ad-hoc workflow that is not actually part of the program. I would prefer this to be managed.

coming to bins: It’s just that I haven’t had a workflow with them that felt organic: I use them when I have them, but they always felt like an extra thing: I suspect that this is because I didn’t do it right 😉 I think this comes to your metadata idea which is lacking in blender right now, but maybe not for long: It’s possible we can use custom data on strips.

I actually think Blender could accommodate a ‘fast cutting’ workflow along with a ‘bin’ based workflow at the same time: just allow adding stuff directly into the sequencer as now, so that users don’t have to have a bin view open while they edit, but also add it to the bin. This would mean that the way the strip data is stored would be different (strip->input clip, rather than the strip having the input connection directly)… maybe not so trivial.

The problems that I’m solving with Blender are far more fundamental than metadata: it basically boils down to robustness and speed. I’d like to be able to edit really real time (no dropped frames) so I can see what I’m doing, so this meant some enhancements/workarounds to the proxy system. I’d also like to take an edit + proxies offline (in the networking sense), work on it, commit my work, and then reconnect to the network and get a correct render. This is pretty basic, and I like this stuff to be automatically handled. I can do this in Blender with a bunch of addons, so I can get content.

Keep in mind: I’ve never edited a feature film, and never edited something for a director other than myself.

ahah, looks like some comments were incorrectly marked as spam. apologies.

Shane, some answers:

-recent work on prefetching was for the movie clip editor. prefetching still doesn’t work for the sequencer, and the ui options for it don’t affect the sequencer (just look at your cpu usage: it will remain single threaded)

-blender uses ffmpeg but strips out a lot of codecs. Also, just using ffmpeg doesn’t mean the codecs will work for editing, as many of them don’t store each frame, so you have to seek back or forwards to get a full frame and interpolate from there.

for example PiTiVi, kdenlive and cinelerra are serious editor projects (probably not the only ones) and they each work on some kind of underlying framework: openquicktime in the case of cinelerra, mlt in the case of kdenlive, and gstreamer/gnonlin/ges in the case of PiTiVi. Other projects can benefit from these libraries.

Bassam thank you for your clear and honest appraisal. I will agree that editing almost anything only benefits from accurate playback. I am amazed by the smooth replay from a fast efficient media player like VLC compared to poor old Blender. It’s like I am seeing the difference between reality and a dream that I have no say in and cannot control.

It’s also funny that when you turn off AV sync the playback performance often improves!

BTW I agree, when I recollect back to my early FCP days I was stunned that anyone would produce a confusing UI like that. Especially after spending years in Avids where so much is intuitive and clearly arranged.

Thinking about pseudo bins, I think that one could arrange strips in their own scenes for selecting a trim (see this script by Sunboy http://blenderartists.org/forum/showthread.php?264676-Sequencer-Addon-Trim-Videos-before-adding-them-to-the-Timeline-(In-and-Outpoints) )

To add metadata I would suggest linking markers to the text editor, so that you could add searchable text strings. When clicked on, these would somehow move the play indicator to the appropriate marker in the active VSE timeline. Thus we would accrue many strips as independent scenes, but how then do we search the scenes for the necessary media when we are sitting in the master scene? Hmmm. How can you access the “metadata” in those “strip” scenes’ text blocks? But certainly it seems within reach.

But this doesn’t solve the playback issues… are those slowdowns caused by buffering or caching problems? Sergey has suggested that he is not prepared to port the Movie Clip editor’s caching to the VSE 🙁
